"An ancient tower looming above a city":

I've been developing a short Dungeons and Dragons campaign recently, as part of my first attempt at being a dungeon master.

I spent some time searching for reference images to set the scene and build atmosphere for the story. They are never quite what I'm looking for, though they are often good enough to paint a scene.

Recently, however, I came across *Taming Transformers for High-Resolution Image Synthesis* and Katherine Crowson's VQGAN+CLIP notebook, which is available for easy reuse as a Python application.

GANs and various forms of image synthesis aren't particularly new, but a well-packaged library for running them with a variety of high-quality models is. VQGAN (Vector-Quantized Generative Adversarial Network) is a variation on the earlier VQ-VAE that aims for higher perceptual quality. CLIP (Contrastive Language-Image Pre-training) maps images and text into a shared embedding space, so it can score how well an image matches a natural-language description.
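The "vector-quantized" part means that the encoder's output at each position of a small spatial grid is snapped to the nearest entry in a learned codebook, so an image becomes a grid of discrete codes. Below is a minimal pure-Python sketch of just that nearest-codebook lookup. The codebook and encoder outputs are made-up toy values, and `quantize` is a hypothetical name, not a function from the Taming Transformers code.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(vectors, codebook):
    """Snap each vector to its nearest codebook entry.

    Returns the chosen code indices and the quantized vectors.
    """
    indices = [
        min(range(len(codebook)), key=lambda i: squared_distance(v, codebook[i]))
        for v in vectors
    ]
    return indices, [codebook[i] for i in indices]

# Toy 2-D codebook; a trained VQGAN codebook is learned and much larger.
codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, 0.5)]
# Made-up encoder outputs, one per position in a (tiny) spatial grid.
encoder_outputs = [(0.9, 1.2), (-0.8, 0.4), (0.1, -0.2)]

indices, quantized = quantize(encoder_outputs, codebook)
print(indices)  # [1, 2, 0]
```

In the real model the grid is larger and the vectors are much higher-dimensional, but the idea of replacing each vector with its closest codebook entry is the same.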

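The VQGAN+CLIP scripts bundle the two together: you write natural-language prompts, VQGAN generates an image, and CLIP's score of how well that image matches the prompts is used to iteratively adjust VQGAN's latent codes until the image fits the description.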
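As a rough illustration of that loop, here is a toy sketch under heavy assumptions: the VQGAN decoder and CLIP image encoder are replaced by a hypothetical fixed linear map (`toy_image_embedding`), the prompt's CLIP text embedding is a made-up vector, and gradients come from finite differences rather than autograd. The real scripts also use an Adam optimiser and random cutouts of the image, which are left out here. What the sketch does show is the core idea: the latent is nudged step by step so the image embedding points closer to the text embedding.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def toy_image_embedding(latent):
    """Hypothetical stand-in for 'decode with VQGAN, then embed with CLIP'."""
    x, y, z = latent
    return [x + 0.5 * y, y - 0.2 * z, z]

def prompt_loss(latent, text_embedding):
    """Lower is better: 0.0 when the 'image' points the same way as the prompt."""
    return 1.0 - cosine_similarity(toy_image_embedding(latent), text_embedding)

def numerical_gradient(f, v, eps=1e-6):
    """Central finite differences (the real scripts use PyTorch autograd)."""
    grad = []
    for i in range(len(v)):
        up, down = list(v), list(v)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def optimise(latent, text_embedding, steps=250, lr=0.1):
    """Plain gradient descent on the latent, one update per iteration."""
    for _ in range(steps):
        g = numerical_gradient(lambda v: prompt_loss(v, text_embedding), latent)
        latent = [x - lr * gx for x, gx in zip(latent, g)]
    return latent

text_embedding = [0.2, 0.9, 0.4]  # made-up 'CLIP text embedding' for a prompt
start = [1.0, -0.5, 0.3]          # made-up initial latent
result = optimise(start, text_embedding)
print(f"loss before: {prompt_loss(start, text_embedding):.3f}")
print(f"loss after:  {prompt_loss(result, text_embedding):.3f}")
```

Each pass through the loop corresponds loosely to one of the "iterations" the scripts report, which is why more iterations generally mean a closer match to the prompt.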

"Girl in long coat running down alley":

Using the ImageNet model you can generate some brilliant fantasy images that I feel make excellent prompts for the theatre of the mind, a term for how RPG players must imagine the world their characters inhabit. It relies on the GM's descriptions, audio cues and reference images. It's important to strike a balance: provide just enough cues to let the players' imaginations take over.

"A wizard looking at shelves of potions.":

I think the synthetic images are perfect for this. Because they lack fine detail, they don't prescribe an overly specific scene, yet they carry enough information to act as recognisable prompts.

"A purple haired girl riding a dragon":

Adding style keywords such as "surreal", "CGI" or "unreal engine", or naming a colour palette, is a great way to build a collection of images with a consistent theme. For example, here is the same prompt with a different keyword added as a secondary prompt (weighted at 0.75):

"A wizard stands before a dragon | sketch":

"A wizard stands before a dragon | unreal":

"A wizard stands before a dragon | photorealistic":

"A wizard stands before a dragon | blueprint":

You can even weight and chain multiple prompts so that certain elements become more prominent, allowing you a large degree of customisation.
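The `|` in the captions above separates the main prompt from a secondary one, and weighting is what lets one element dominate. The sketch below ties the two together under stated assumptions. `parse_prompts` is a hypothetical parser for a `text | text:weight` syntax (I believe the command-line scripts accept something similar, but check their documentation). The combined loss is each prompt's weight multiplied by an angle-based "spherical" distance between embeddings, modelled on my understanding of Crowson's notebook. The 2-D embeddings and candidate images are made up. Raising the weight on "sketch" from 0.25 to 0.75 flips which candidate has the lower loss, which is the "more prominent" effect in miniature.

```python
import math

def parse_prompts(spec, default_weight=1.0):
    """Hypothetical: split 'text | text:weight | ...' into (text, weight) pairs."""
    prompts = []
    for part in (p.strip() for p in spec.split("|")):
        if not part:
            continue  # skip empty segments, e.g. from a trailing '|'
        text, sep, weight = part.rpartition(":")
        if sep:
            try:
                prompts.append((text.strip(), float(weight)))
                continue
            except ValueError:
                pass  # the colon was part of the text, not a weight
        prompts.append((part, default_weight))
    return prompts

def unit(v):
    """Scale a vector to unit length (embeddings are compared by direction)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def spherical_distance(a, b):
    """2 * asin(chord / 2) ** 2, which equals angle**2 / 2 for unit vectors."""
    chord = math.dist(unit(a), unit(b))  # straight-line distance between unit vectors
    return 2 * math.asin(min(chord / 2, 1.0)) ** 2

def combined_loss(image_embedding, weighted_prompts):
    """Weighted sum of the distances from the image to every prompt."""
    return sum(w * spherical_distance(image_embedding, emb) for emb, w in weighted_prompts)

wizard_prompt = "A wizard stands before a dragon"
toy_embeddings = {wizard_prompt: (1.0, 0.0), "sketch": (0.0, 1.0)}  # made-up 2-D 'embeddings'
candidates = {"mostly wizard": (1.0, 0.2), "more sketch-like": (0.6, 1.0)}

choices = {}
for spec in (f"{wizard_prompt} | sketch:0.25", f"{wizard_prompt} | sketch:0.75"):
    prompts = [(toy_embeddings[text], w) for text, w in parse_prompts(spec)]
    choices[spec] = min(candidates, key=lambda name: combined_loss(candidates[name], prompts))
    print(spec, "->", choices[spec])
```

In this formulation a negative weight would turn a prompt into something to steer away from, since a larger distance to it would then lower the total loss.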

Results vary a lot, and it can take some work to find prompts that produce a satisfying image. The process is also computationally expensive: my RTX 2080 with 8 GB of VRAM can only produce 380x380 images, and each takes about 4 minutes for roughly 250 iterations.
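Put another way, using only the figures above:

$$
\frac{4 \times 60\ \text{s}}{250\ \text{iterations}} \approx 0.96\ \text{s per iteration},
\qquad
\frac{60\ \text{min}}{4\ \text{min per image}} = 15\ \text{images per hour}.
$$

So the four style variations of the wizard prompt take roughly a quarter of an hour of GPU time.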

"Cats having an auction.":

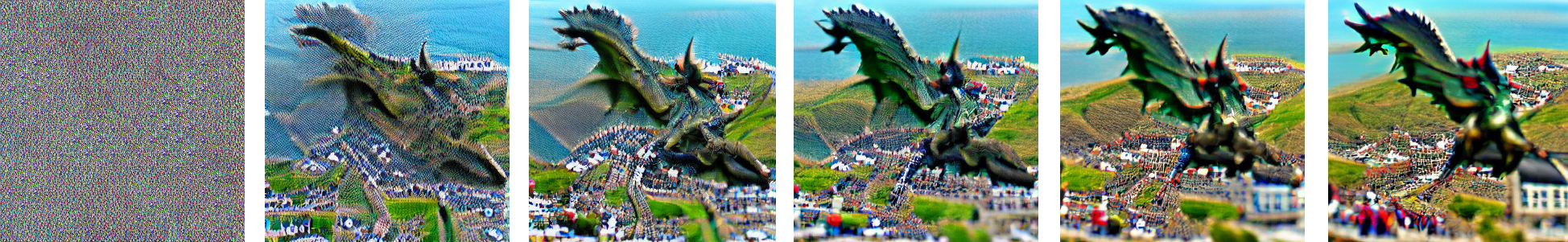

It's also interesting to see the development of details over iterations.

"Dragon flying over Aberystwyth tiltshift":

I'm really looking forward to exploring this use of GANs further to see how far it can go. It's easy to imagine a world where these images are generated in milliseconds and in much greater detail, where storytelling can be highly interactive and immersive. Combined with the likes of GPT, you could have entirely dynamic games with dialogue and imagery generated in response to player input, much like the mind game in Ender's Game.